Superheroes Neil and Nolan (N&N) just gave their talk on our real-time PuSH system at Web 2.0 in New York. You can download the slides, or view the contents I’ve oh-so-roughly transcribed here:

Oh Hai. My name is Neil.

My name is Neil Walker, I’m an Engineer at Flickr.

I mostly work on the back-end infrastructure portion of things. I’m going to be telling you about a system Nolan and I built to send real-time updates about things that happen on Flickr out to the things and people that want to know about them.

My portion of the talk is mostly going to be concerned with the back-end. How did we build the guts of the system and what we think are the key components.

What I’m not talking about.

This talk isn’t about building Twitter, or a full-scale pubsub system built from the ground-up to handle millions of updates per second. Instead it’s geared around what you can do with less. What if you’ve got an existing site with lots of functionality already built and you want to add some real-time notification capabilities to it? What can you do if you don’t have a lot of resources to throw at the problem?

It turns out that you can get pretty far with some bits and pieces that many sites will already have, and how to fit those bits together is what we’re going to cover.

This is Nolan

Hi, I’m Nolan Caudill, a backend engineer at Flickr. I work on most of our backend systems, but focus mainly on geo, the API, internationalization and localization, and general site performance.

The Fun Part

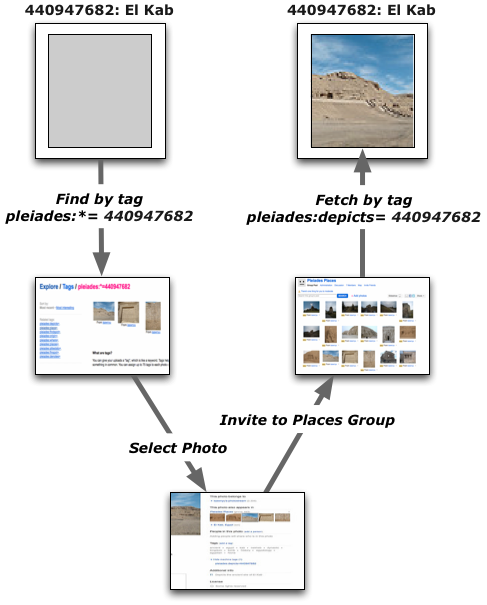

I’m going to focus mainly on why you would want to use the new Push API, what problems it solves, and why it’s better in some cases than our traditional pull-based APIs. Also, I’m going to talk about how you get up and running with the new APIs. We know it’s a bit of a mind flip from the traditional APIs and we want to help you with the transition.

So this was the Wired cover for March 1997. From the article: “Media that merrily slip across channels, guiding human attention as it skips from desktop screen to phonetop screen to a car windshield. These new interfaces work on the emerging universe of networked media that are spreading across the telecosm.” Whatever that means. So that was almost 15 years ago. “Kiss your browser goodbye!” didn’t exactly pan out.

Flickr PuSH

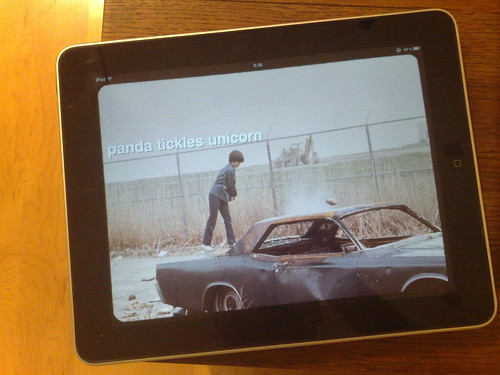

But jumping forward to now, we DO have all those screens. Most of us have 3, 4 or more devices with high-resolution displays on them, almost all of them with web browsers, and varying levels of human interfaces. Some of them even have cameras. Lots of us have 2nd or even 3rd monitors at work. For Flickr, having apps that can be used to explore photos on all those different screens regardless of user interface seems only natural, and that’s what we hoped to inspire with a real-time API. Before we get into the details I’d like to show a simple little application that Aaron Cope, who used to work at Flickr, built on top of our PUSH API, just to see what would happen.

Basically it’s a simple web page that can be run full-screen on almost any device that has a browser. It lets you subscribe to various streams on Flickr like photos from your friends, photos that your friends favorite, photos with particular tags, and photos from a location. It then receives these photos in more or less real-time and displays them full-screen with the title overlaid on top of the photo.

That’s it. No controls, no user interface after the initial point of telling it what you’re interested in.

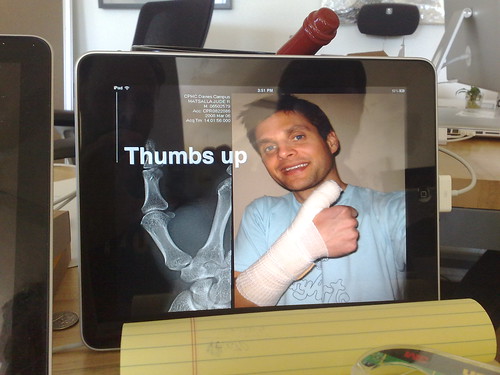

So Flickr being a social website, you start to get photos of your friends, and the things that happen to them. You also get photos of things that your friends are interested in. As they fave them, they show up in your live stream. Then you might fave the same image, and more of your friends see it, fave it, and so on. It’s a very natural way for a popular object to percolate around your network, and often you don’t have to worry about missing something good because if it’s popular it’ll bubble back up as another one of your contacts faves it later on.

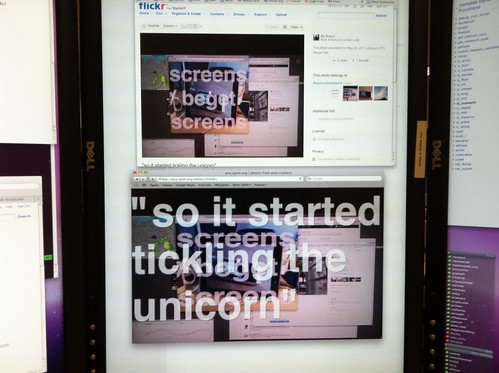

Interesting things start happening when you have devices with screens AND cameras – a photo makes it into someone’s stream, they take a photo of it and upload that, which makes it back into the original uploader’s stream, who faves it, etc. and you get a sort of live conversation taking place with photos. Knowing that when you upload a photo it’s going to appear on someone’s monitor or widescreen TV adds a new dimension to photosharing.

Eventually it can get a bit ridiculous.

A bit of history.

Anyway, we’ll go back to how all this got started at Flickr.

- A while ago we had the need to get new public uploads/updates to search partners in a timely manner. Why?

- Being crawled is fine but is expensive for the crawler and crawlee and isn’t always accurate.

- Making a specific API for that purpose could do it but is probably going to be clunky, require too much work by both parties, and means that whatever it is that’s hitting your API can potentially affect your site performance, or other API users. Pushing out updates where you control the flow is really the way to go.

- What did we build?

'headers' => array( 'Content-type' => 'application/atom+xml', 'User-Agent' => 'Flickr Kitten Hose' )

Basically a firehose of public uploads/updates (incl. deletions/privacy changes) that we can aim somewhere and turn it on. Similar to the Twitter firehose, but for photos uploaded to Flickr. So essentially stuff happens on Flickr, we transform the events into some easily parseable format, bundle everything up into reasonable-sized blobs and POST it to a web server somewhere that consumes the data.

PubSubHubbub: Thanks, Google

Rather than invent a specific format/protocol we decided to pick something familiar. Something that already has a spec, is well-documented and is well-understood. So we picked a common Publish-Subscribe protocol. Google’s PubSubHubbub has definitions for how to publish a feed to a hub, how to subscribe and unsubscribe to topics and verify subscriptions and all the other good things that people who write specs like to specify. Of course we didn’t actually need a lot of the spec to accomplish our goals for just the firehose bit, but we did have in the back of our minds the thought of expanding on what we built.

So choosing at the start to meet an accepted spec was probably a good idea, and allowed us to get going without having to think too hard about what might come later – because someone else already figured out how that stuff should work.

What?

A hub with no subscription control and a single hard-coded endpoint. If you want to fit the firehose idea into the PubSub metaphor then essentially what we built at first was a hub (i.e. all of Flickr) that has one hard-coded “topic” that can be subscribed to – namely all public uploads & updates. Except that the subscribing and unsubscribing part involves humans agreeing to things and then turning the firehose on, instead of machines POSTing to web servers and receiving callbacks, etc. But the pubsub metaphor still fits.

How?

So how did we build it? What I’m going to get into now are what I think are the key pieces of the back-end. I’ll be glossing over some parts of it that are pretty basic and not terribly interesting in the interests of focusing on the good stuff.

Async task system Gearman, etc

The first (and probably the most critical) part of what we built is an asynchronous job queue, or what we often call our Offline Task System. Essentially it’s a way of de-coupling expensive work from the web servers. Sooner or later you end up wanting to perform an operation that takes longer than you want to make a user at the other end wait (or places more load on your web servers than you care to suffer) and so you want to off-load that work somewhere else.

We use this concept EVERYWHERE. Examples: Modifying/deleting large batches of photos. Computing recommendations for who you might want to add as a contact. We have several hundred different tasks, and there are often thousands of them running in parallel. We built our own that fits our specific needs, but a great open-source example is Gearman. Mature, easy to set up and use and scales quite well.

Stuff happens on flickr.

So the very start of the flow of our system begins when stuff happens on Flickr. The obvious example is a photo upload, often involving cats. Other things we’re interested in are updates: Changing the title, the description or other meta-data of a photo. Also we want to provide updates when photos are deleted (or have their visibility switched to private, which in terms of updates should look the same as a deletion)

Insert a task. olt::insert('push_new_photo', $photo_id);

When any of these things happen, we simply insert a task into our offline task system. It’s very low cost, doesn’t block, and once that’s complete everything else that happens is completely de-coupled from the web servers.

Task runs.

Insert the event into a queue

When the task runs, all it does is take the event it represents, transform it into a little blob of JSON and stick it in a queue.

Redis Lists

RPUSH kitten_hose blob_of_json

For the queues we chose to use Redis Lists. For those of you not familiar with Redis yet, it’s an open-source, memory-only, key-value store that supports structured data. Think memcached with commonly-used data structures like hashes, sets, and lists and efficient operations on them. At this point Redis is fairly stable and reliable, the performance is fantastic, and it’s dead-simple to use.

We accept a little bit of a HA compromise for the fact that it’s RAM-only and not clustered (yet), so if it’s down you’ll drop some updates on the floor. But our goal here is just building a firehose for updates – not a 100%-reliable archive of user activity. We can happily accept the trade-offs that using Redis implies.
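As a rough sketch of what such a task might do, written in Python rather than Flickr’s PHP: the event is serialized to JSON and appended to the tail of a list, and a consumer later pops from the head. `FakeRedis` is a hypothetical in-memory stand-in that mimics only RPUSH, LPOP and LLEN so the example runs without a server, and the event fields are made up for illustration.

```python
import json
from collections import defaultdict, deque


class FakeRedis:
    """In-memory stand-in for the few Redis list commands used here."""

    def __init__(self):
        self.lists = defaultdict(deque)

    def rpush(self, key, value):
        # Append to the tail of the list and return its new length, like RPUSH.
        self.lists[key].append(value)
        return len(self.lists[key])

    def lpop(self, key):
        # Pop from the head of the list, or return None if it is empty, like LPOP.
        return self.lists[key].popleft() if self.lists[key] else None

    def llen(self, key):
        return len(self.lists[key])


def push_new_photo(redis, photo_id):
    """Task body: turn the event into a small JSON blob and queue it."""
    blob = json.dumps({"event": "new_photo", "photo_id": photo_id})
    return redis.rpush("kitten_hose", blob)


redis = FakeRedis()
push_new_photo(redis, 1001)
push_new_photo(redis, 1002)
first = json.loads(redis.lpop("kitten_hose"))
print(first["photo_id"])  # the oldest event comes out first
```

Pushing on the right and popping on the left is what gives the list its first-in, first-out behaviour.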

Uhhh

Why didn’t we just insert into the queue in the first place? At this point it might occur to you that instead of inserting a task (into a queue) that when it runs just inserts something else into another queue, why not just insert the thing into the queue in the first place? We’ll get to that in a bit.

However, two useful properties of our task system are that 1) you can insert tasks with a delay before they run, and 2) task inserts with the same arguments as an existing task fail silently – i.e. you only get the one task.

The upshot is that you can set a delay on things like updates so that a number of updates that happen over a short period (example) only generate a single task – and when it runs it finds all of them.
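Those two properties can be illustrated with a toy queue. This is only a sketch in Python under assumed names (`TaskQueue`, `push_photo_update`, a 30-second delay), not Flickr’s actual task system: inserts carry a delay, and a second insert with the same name and arguments is dropped while the first is still waiting.

```python
import heapq


class TaskQueue:
    """Toy task queue with delayed execution and silent de-duplication."""

    def __init__(self):
        self._heap = []        # entries are (run_at, sequence, name, args)
        self._pending = set()  # (name, args) pairs that are waiting to run
        self._seq = 0

    def insert(self, name, args, now, delay=0):
        key = (name, args)
        if key in self._pending:
            return False  # same task already queued: drop it silently
        self._pending.add(key)
        heapq.heappush(self._heap, (now + delay, self._seq, name, args))
        self._seq += 1
        return True

    def run_due(self, now):
        """Pop and return every task whose delay has elapsed."""
        due = []
        while self._heap and self._heap[0][0] <= now:
            _, _, name, args = heapq.heappop(self._heap)
            self._pending.discard((name, args))
            due.append((name, args))
        return due


queue = TaskQueue()
# Three edits to photo 42 within a few seconds produce a single task.
results = [queue.insert("push_photo_update", (42,), now=t, delay=30) for t in (0, 2, 5)]
early = queue.run_due(now=10)  # nothing is due yet
late = queue.run_due(now=30)   # the one task runs after all three edits landed
```

Because the task only records which photo changed, it can read the photo’s current state when it finally runs and pick up every edit made in the meantime.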

Then what happens?

Summary:

So at this point you’ve got events happening all over Flickr, uploads and updates (around 100/s depending on the time of day), all of them inserting tasks, and the task system grabbing each event and sticking it in a queue, in the form of a Redis list.

The next step is obviously to consume the queue.

Cron

- Insert more tasks!

- Consume the queue

- LPOP kitten_hose

You could have a daemon that sits there and consumes the queue and makes posts to the endpoint and you’re done. Basically what we do is have a cron job that periodically looks at the queue and inserts one or more tasks (depending on the size of the queue) whose job is to drain a particular number of updates from the queue and send them to the endpoint.

At first this is going to seem needlessly complicated but you’ll see why when we get to generalize the system for an arbitrary number of subscribers. One thing it does however is provide a convenient way to throttle and buffer the output – depending on what your endpoint can handle you can twiddle the knobs on your task system to run more jobs in parallel, or turn it down to choke off the firehose (and maybe drop updates on the floor if the queue gets too big…). It gives you flexibility by decoupling the delivery mechanism.
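A minimal sketch of that cron-plus-tasks arrangement, in Python with a `deque` standing in for the Redis list. The batch size and the per-run cap are assumed values that play the role of the knobs described above; neither number comes from the talk.

```python
import math
from collections import deque

BATCH_SIZE = 100       # updates each drain task sends in one POST (assumed)
MAX_TASKS_PER_RUN = 5  # throttle knob: cap on tasks inserted per cron run (assumed)


def plan_drain_tasks(queue_length, batch_size=BATCH_SIZE, max_tasks=MAX_TASKS_PER_RUN):
    """Return how many drain tasks the cron job should insert."""
    needed = math.ceil(queue_length / batch_size)
    return min(needed, max_tasks)


def drain(queue, batch_size=BATCH_SIZE):
    """Drain task body: pop up to batch_size updates from the head of the queue."""
    batch = []
    while queue and len(batch) < batch_size:
        batch.append(queue.popleft())  # the equivalent of LPOP
    return batch  # a real task would POST this batch to the endpoint


queue = deque(range(250))
tasks = plan_drain_tasks(len(queue))
batches = [drain(queue) for _ in range(tasks)]
print(tasks, [len(b) for b in batches])
```

Lowering `MAX_TASKS_PER_RUN` chokes off the output without touching the producers; the queue simply grows until the next run.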

Draw me a picture

It’s probably time for a simple diagram: Things happening on Flickr trigger the creation of tasks, that run asynchronously, and stuff things into a queue in Redis. Periodically more tasks get triggered to drain the queue and push whatever they find back out to the eventual destination.

It worked pretty well.

- Didn’t take the site down

- Backfill 3B photos

So it turns out that it all actually worked pretty well.

The decoupling of everything using the asynchronous task approach meant that it had pretty much zero impact on the rest of the site. We could develop it live, experiment with capacity etc without having any fear of impacting site performance for our users because of how loosely-coupled all the pieces were – if anything goes wrong the damage is limited to that little piece.

Eventually we decided to run a backfill to a 3rd-party search index of all of our public photos back to the beginning of Flickr (somewhere around 3 billion) and we were able to complete it in 2 weeks, using our existing task system and the only new hardware being one redis box for the queues. So, it worked really well!

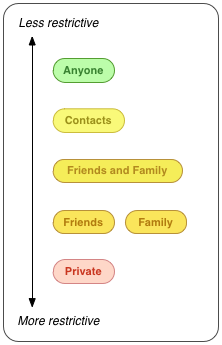

Photos from your friends

So we started to ask what if we wanted to make it into something that might be useful on an individual level? It seemed to be pretty reliable and low-impact to the site and showed promise of scaling fairly well. The obvious thing that would be of interest on a user level would be photos from your contacts; Here’s my endpoint, POST stuff to me when my friends upload new photos (or change existing ones).

Other things that could be of interest are when your contacts favorite a photo, or when you or your contacts are tagged in a photo, or when someone anywhere on Flickr tags a photo with the tag “kitten”. But let’s start with photos from your contacts.

What?

- A list of users (we have those!)

- An endpoint (URL)

Looking at what we’d need to add to the system to support user-specific subscriptions there’s the obvious: a record of the subscription somewhere in a database, the callback mechanism as per the PubSubHubbub spec, etc. I’m going to gloss over that because it’s pretty straightforward and not really interesting. What we need to look at to manage the updates and figure out who gets what though is basically a mapping of users (the contacts who upload photos) to endpoints (i.e. subscribers).

Moar Redis

SADD user_1234 endpoint_5678

So we turned to Redis again. Redis offers a set data structure that we can use to maintain this relationship pretty easily. When someone wants to subscribe to photos from their contacts, we create a Redis set for each of their contacts and add to it a pointer to the endpoint.

The task again.

SMEMBERS user_1234

foreach $endpoint RPUSH $endpoint json_blob

So now we go back to the Task that gets inserted in response to something happening on Flickr. Where it used to just insert an event into “The” single queue, now it looks at the set of endpoints that are interested in uploads from that user and inserts the event into the queue for each of them. Hopefully now you can see why we have a task do this rather than do it on the web server while the user waits – it’s still going to be quick because the queue inserts in redis are constant-time, but if you have a lot of contacts they could add up. Best to just de-couple it all and not worry about running into that problem.
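The fan-out can be sketched in a few lines of Python. Plain dictionaries stand in for Redis here (sets for the SADD/SMEMBERS side, deques for the per-endpoint lists), and the key names follow the slide examples; everything else is an assumption made for illustration.

```python
import json
from collections import defaultdict, deque

subscribers = defaultdict(set)  # "user_<id>" -> set of endpoint ids (Redis sets)
queues = defaultdict(deque)     # endpoint id -> queued JSON blobs (Redis lists)


def subscribe(endpoint, contact_ids):
    """Equivalent of one SADD per contact of the subscribing user."""
    for contact_id in contact_ids:
        subscribers[f"user_{contact_id}"].add(endpoint)


def fan_out(uploader_id, event):
    """Task body: SMEMBERS on the uploader, then RPUSH to each endpoint's queue."""
    blob = json.dumps(event)
    endpoints = subscribers.get(f"user_{uploader_id}", set())
    for endpoint in endpoints:
        queues[endpoint].append(blob)
    return len(endpoints)


subscribe("endpoint_5678", contact_ids=[1234, 4321])
subscribe("endpoint_9999", contact_ids=[1234])
delivered = fan_out(1234, {"event": "new_photo", "photo_id": 1001})
ignored = fan_out(7777, {"event": "new_photo", "photo_id": 1002})
print(delivered, ignored)
```

The cost of one upload is proportional to the number of endpoints watching that uploader, which is why this loop lives in a task rather than in the web request.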

Cron again.

Insert tasks for each queue (endpoint)

Now we turn to draining the queues, and again you can see where the task system comes in. With a large number of endpoints all the cron job has to do is run through each of them, look at how many events need to be consumed and insert an appropriate number of tasks. So scaling the output part of the system (and actually most of the input too) then becomes a matter of scaling your task system – and that’s a problem that’s already been solved.

Cache is your friend.

One thing that plays a key role in the system that I haven’t talked about is a caching layer. Almost any site of a reasonable size these days has a memcached layer (or something similar) in between the front-ends and the databases. One of the great things about this for a push system is that because you’re dealing with things as they happen, all the objects that you typically need to access to build your update stream are almost always in cache – because they were just accessed.

So it turns out that not only does a system like this have almost no impact on your normal page serving time (because of all the de-coupling), it also ends up having very little impact on your databases, due to most things being in cache.

Redis Numbers

- 1000 subscriptions

- 50K keys (queues) / 300 MB

- 100 qps – 8-core / 8GB 5% cpu

And a few numbers showing how Redis is performing as our little DIY queueing and subscription system. 1000 subscriptions for various different things takes around 50K keys, consuming 300 MB of ram, and at about 100 qps on an 8-core Linux box it’s barely ticking over.

3 Things: Cache, Tasks, & Queues

So putting all the pieces together it’s actually pretty simple, and relies upon things that you probably already have: a caching layer, some kind of asynchronous task system and a rudimentary queueing system. Even if you don’t have all of these they’re pretty well-understood pieces and there are lots of open-source options to choose from: Just grab memcached, gearman, and redis and off you go.

We think you can go a long way to building the back-end of a decent push update system with just these simple pieces. So that’s the end of my portion of the talk, and now I’ll turn it over to Nolan to talk more about the front-end, and how to make it easier for a client to consume updates.

Why Push? And what’s in it for me.

So now that Neil’s explained the original reasoning and how we built a system for real-time push, the question is why, as an API consumer, would you want to use it which leads into how to get started with it.

Flickr API = Great Success

Tens of thousands of keys making hundreds of millions of calls per day. By any measure, Flickr’s traditional pull-based API has been incredibly successful. Developers that have used our API range from Fortune 10 businesses to PhD students to hundreds of thousands of other developers that just want to do something fun with photos and the data around them.

I checked the numbers this weekend and on average, we’ve got around 10,000 different API keys making hundreds of millions of calls per day.

It’s easy. Just use your browser.

One major reason for its popularity is how easy it is to use. When you combine data that people want with an API that’s easy to use, you get thousands and thousands of apps built with it.

There’s effectively no barrier to entry to get started with using Flickr’s API: if you have a browser, you can access every single API method. And if you want to do this programmatically server-side, say a nightly cronjob that fetches your own uploads, you can just do a simple ‘curl’. Either way, our pull-based API is a one-liner: whether that line is a URL in your browser, or a one-line shell script.
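To make the one-liner concrete, here is a small Python sketch that only builds the URL you would paste into a browser or hand to curl; it makes no network call. It assumes the public REST endpoint and the `flickr.people.getPublicPhotos` method, and the key and user id are placeholders.

```python
from urllib.parse import urlencode

# Flickr's REST endpoint; the API key and user id below are placeholders.
REST_ENDPOINT = "https://api.flickr.com/services/rest/"


def build_pull_url(method, api_key, **params):
    """Return the full URL for a single pull-style API call."""
    query = {"method": method, "api_key": api_key, "format": "json", "nojsoncallback": 1}
    query.update(params)
    return REST_ENDPOINT + "?" + urlencode(query)


url = build_pull_url(
    "flickr.people.getPublicPhotos",
    "YOUR_API_KEY",
    user_id="YOUR_USER_ID",
)
print(url)
```

Ask for something, get something: the whole interaction fits in one request.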

We’ll curl it for you.

It’s even simpler than that, if you need it to be. Even if you don’t have a server, or don’t want to read our documentation, just use the API Explorer on Flickr. Every API method we publicly support is represented here, not just in documentation, but on an actual real-life form for you to build queries with. Just fill out the form, press enter, and we’ll curl it for you and spit out the results in pretty JSON or XML format.

One side benefit of this pull-based API is that it also makes debugging easy. You can run tons of API queries as fast as you can debug. This is huge when building a new system. If you formed the call correctly, you get data. If you messed up, modify, rinse, and repeat.

Flickr is a visual news feed.

So back to “why push”…

I’m the kind of programmer that needs to have a fairly-focused work environment, but I also like to know what’s going on in the world at the same time. Twitter is a really great news-before-its-News service, but for me it’s a bit too distracting to have running on a screen next to my code.

I’ve recently hooked up my second monitor to show me two Flickr real-time feeds: the first of which is the Commons, our group of accounts belonging to museums and various historical archives, which is motivational for me, reminding me that Flickr is really something special with real history being stored on it.

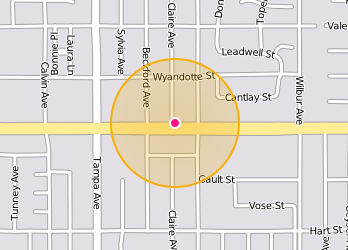

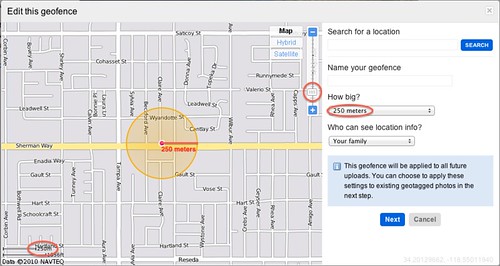

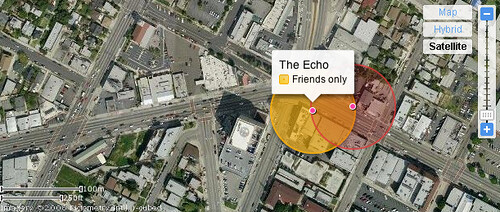

The second feed is what I consider my glimpse into what’s going on around me, the stuff that immediately affects me. So I’ve hooked up a push feed of photos geotagged a half kilometer around my house and a half-kilometer around the office.

Around my house, it’s usually people taking pictures of the Painted Ladies in Alamo Square but then I saw this picture come across. When your entire neighborhood is made up of densely-packed, 50+ year old homes made of questionable building materials, fires are scary things. I then jumped on Twitter and found out there was a multiple-alarm fire just a few blocks from my house. Also, around the office, just this past week, I saw pictures from San Francisco’s chapter of the OccupyWallStreet protests. The news wasn’t on the ground yet but here I was seeing live and extremely relevant things to me. Like they say, a picture can often say more than a thousand words.

Subscribing to a real-time stream of photos from a specific location is about as hyperlocal as you can be. Maybe I wouldn’t follow the right people on Twitter, or I wouldn’t check the local news site until later that evening, but people do take pictures of important things and events and post them to the site, and it doesn’t get much more timely than real-time.

Push is the opposite of Pull. And other obvious facts.

Ok, so back to the technology. Push is a completely different animal than our pull-based APIs. Consuming a real-time push feed flips most things about our API on its head. For starters, you’ll need a full-fledged, always-on web server that is exposed to the public Internet. And running on this web server will be software that is based on a protocol described in a spec-with-a-capital-S. This demands a lot more from the developer than just a one-line curl.

Pull: Hit this URL and read

Push: Read this spec and wait

With our regular API, you could basically treat it as a function call, that is “ask for something, get something”. With Push, now you’re implementing a full protocol where the data will come *eventually*. Now you’ll need to wade through pages of dense and sometimes ambiguous instructions, so you can wait for us to tell you that your friend uploaded a picture. Another big but obvious difference between the push and pull API is that with Push, your application only gets what we send to you. There’s no looking back in time. And due to this read-spec, write-code, and wait and wait some more debug cycle, it takes a while to get the endpoint working correctly.

Personally, I have access to the Flickr codebase and I still had questions about how to make my endpoint do the right thing, and it took me a few good hours one morning and more than one cup of coffee to get it working right.

So, seriously, why Push?

Because it’s fantastic. Well now that I’ve made Push seem a little scary, seriously, why Push?

The main thing, I think, is that we give you things as they happen. If you are building a site that uses Flickr data, would you rather we send you data when we get it, or set up a bunch of cronjobs that fetch possibly non-changing data? Even if you like your cronjobs, what happens when you have 100 users? 1,000 users? 1,000,000? I do want to make one note about what we mean by “real-time.” As you saw from Neil’s presentation, there are levels of queues and consumers, each having a non-zero delay. We get you the photo data as soon as we can, but this might be anywhere from a few seconds to a minute or two after we receive it. We understood we were building a real-time feed for photos uploaded to a website, and not sending direction data to a nuclear warhead. It was okay if things were off by a few seconds.

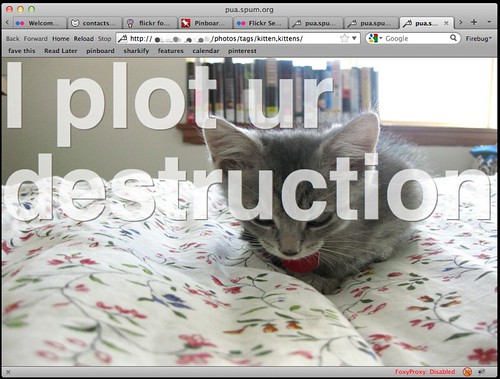

Flickr Globe

Also, receiving real-time photo updates is great for certain types of problems. So, we had the press in the office recently and we wanted to build something cool to run on our big screen behind the developers as we worked. Using the real-time push feed, one of our front-end engineers, Phil Dokas, was able to build an interactive globe that charted where on Earth our photos were being uploaded from in real-time.

The great thing about this is, not only was it a really pretty-looking visualization, but it impressed upon me the magnitude of Flickr being used. The site felt really organic: you could see it being used and growing. For me, it was one of those ‘pale blue dot’ moments that Carl Sagan talked about, where you get a sense of the bigger picture of what you work on. When you work deep on the backend of Flickr, it’s easy to forget that these are real people from literally everywhere on Earth, uploading things they want to remember.

And using the pushed data made this really easy to build. And for reference, this is about 3 minutes’ worth of publicly geotagged photos.

I want to use it, BUT… …it sounds hard.

So, we think this stuff is awesome, and we want you to use it but we do understand it’s fiddly. The spec is dense and debugging is painful. So we’re going to give you a headstart on the whole thing. We’ve written a tiny web server that handles all the subscription handshake stuff and the parsing of photo data and we put it on GitHub, and open sourced it.

flickr-conduit

https://github.com/mncaudill/flickr-conduit

So we’re introducing flickr-conduit, which is a simple server written in node.js that handles the subscription stuff, keeps tabs on when to unsubscribe users, and then finally receives the Flickr posts.

There are a lot of moving parts to setting up a push endpoint: handling everything from getting users to authenticate with your API key, to having them tell you what topics they’re interested in, setting up the subscription between your callback and Flickr, and then figuring out how to get those events to the user when Flickr sends them to you.

In the conduit repository, I’ve included a server that implements the push protocol and then just fires off events in JavaScript when Flickr sends something. I’ve also included a PHP application that handles authenticating the user, letting them pick their topics, and then finally showing them the photos as they come in, just as a demo of what it can do.

I didn’t have time to get a slide up for it, but Tom Carden from Bloom took the PHP application I made and got it ready so that you can easily deploy it to Heroku. It’s great and if you want to run your own conduit-server demo, I’d advise checking it out.

To start with, Conduit is the piece that represents your callback endpoint. You tell Flickr that this is your endpoint and it handles the rest of the subscription handshake stuff, and when Flickr posts data to your server, it goes to your conduit server.

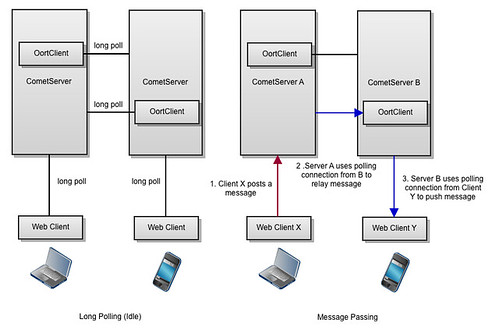

Pub/Sub all the way down

After I finished building this, I realized that unintentionally I took the idea of pub/sub, that is having many subscribers expressing interest in many published streams, and kept that spirit up all the way through to the end of my little node.js server. Explaining this model sheds some light on how the conduit server works.

First, as Neil described, Flickr runs a pub/sub service. With conduit, you can run your own pub/sub server that talks to our published streams. And inside of conduit, we let your application code subscribe to certain events: conduit itself receives Flickr post events and publishes them to your app code to handle how you wish. So there are technically 3 different pub/sub hubs before your app code, which sounds a little complicated, but if you grasp the mental model of how pub/sub works, you understand the full system. Also, keeping the pub/sub levels decoupled from each other provides a lot of simplicity and flexibility.

So, this is a small digression about architecture that I stumbled across while building, that when you’re building systems in the small, it sometimes makes a lot of sense to model the smaller system after the larger system. This may not be groundbreaking for some, but I just found it really neat.

I know ‘curl’. Now, tell me about Push.

With Conduit, we’ve removed a fair amount of the fiddly bits so you can get to doing the fun parts later. I’m going to step you through each of the steps of the whole pubsub flow to show you what each part does.

First, subscribe the user to a topic.

/callback?sub=user1234-contacts-faves

First, you’ll want to subscribe the user to a topic. Topics can be anything from their own uploads, to their contacts’ uploads, to their contacts’ faves and even photos being geotagged at a specific place.

During this subscription, you’ll specify a callback. Once you have conduit running, the callback URL you give Flickr in your subscription should point to the conduit server. One of the tricky parts of handling a multi-user pub/sub server is that you can have many users attached that are waiting for many different streams. When Flickr posts a piece of data to your server, you only know the URL that it came in on, so the callback URL needs to be significant so you can route this piece of data to the right consumer. One way to handle this is just to create a globally unique identifier for a particular subscription, tuck that in a database, and then use that to map a URL to a subscribed user.

Personally, I’m a fan of reducing the number of moving parts as much as possible so I just use something simple, like the example, and make the URL itself identifiable. So for example if user 1234 wants to subscribe to her contacts’ faves, the URL could simply be /user1234-contacts-faves. As long as this callback URL creation algorithm is repeatable, it’s easy to have a common dictionary throughout your app of how to handle a particular subscription.
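One way to picture a repeatable callback scheme is a pair of functions that build the URL from the user and topic and recover the same name from whatever URL a post arrives on. This Python sketch follows the `/callback?sub=...` shape from the slide; the host name and function names are assumptions for illustration, not conduit’s actual code.

```python
from urllib.parse import urlencode, urlsplit, parse_qs


def callback_url(base, user_id, topic):
    """Build a repeatable callback URL that encodes the user and the topic."""
    sub = f"user{user_id}-{topic}"
    return base + "?" + urlencode({"sub": sub})


def event_name(url):
    """Recover the subscription name from the URL a post arrived on."""
    values = parse_qs(urlsplit(url).query).get("sub", [])
    return values[0] if values else None


url = callback_url("https://example.com/callback", 1234, "contacts-faves")
name = event_name(url)
print(url, name)
```

Because both directions are pure functions of the user and topic, no lookup table is needed to route an incoming post.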

The part of the play where Flickr asks a question.

Almost immediately, after making the API call to subscribe the user to a topic, Flickr will respond with a ‘subscribe’ request. Here Flickr is basically asking, “Hi. We just received a subscription request for this callback. Did you send this? If so, repeat the magic password back to me.” All this is described in detail in the spec but you don’t need to know any of that. Conduit handles all of this for you.
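For the curious, the exchange looks roughly like this. The sketch below is in Python and uses the parameter names from the PubSubHubbub 0.3 spec (`hub.mode`, `hub.topic`, `hub.challenge`, `hub.verify_token`); the names Flickr actually sends may differ, and the `pending` dictionary and topic string are hypothetical bookkeeping, not conduit’s real code.

```python
def handle_verification(params, pending):
    """Answer a hub verification GET.

    params:  query-string parameters from the hub's request.
    pending: dict mapping topic -> verify token we sent when subscribing.
    Returns a (status_code, body) pair.
    """
    topic = params.get("hub.topic")
    challenge = params.get("hub.challenge")
    if params.get("hub.mode") not in ("subscribe", "unsubscribe"):
        return 404, ""
    if topic not in pending or challenge is None:
        return 404, ""  # we never asked for this subscription
    if params.get("hub.verify_token") != pending[topic]:
        return 404, ""  # token mismatch: someone else made the request
    return 200, challenge  # echo the challenge back to confirm


pending = {"contacts_faves": "s3cret"}
ok = handle_verification(
    {
        "hub.mode": "subscribe",
        "hub.topic": "contacts_faves",
        "hub.challenge": "abc123",
        "hub.verify_token": "s3cret",
    },
    pending,
)
bad = handle_verification(
    {"hub.mode": "subscribe", "hub.topic": "someone_else", "hub.challenge": "zzz"},
    pending,
)
print(ok, bad)
```

The “magic password” is the challenge string: echoing it back with a 2xx status is what tells the hub the subscription is wanted.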

Debugging: the waiting game.

As I mentioned earlier, debugging the server was the most boring and thus most frustrating part of building an endpoint. I’d write some code, and to see if it did the right thing, I had to wait for one of my subscribed events to happen and get sent to me. One shortcut I found is that I could subscribe to my own faves, and then go through a test account and fave photos, therefore forcing events. This sped things up quite a bit.

Someone’s at the door!

/callback?sub=user1234-contacts-faves maps to user1234-contacts-faves

When an event does occur, Flickr will post it to your callback URL. Conduit then takes this callback URL and, through whatever method you decide, creates an event name for it and then passes it into an internal structure. In my running example, the callback you see up there gets the event name of “user1234-contacts-faves”. Like I said, you could do this mapping of URL to event name however you like, but this is simple and works for me.

The emitter emits.

user1234-contacts-faves

Conduit exposes something that node.js calls an “emitter”. It’s basically a pub/sub structure itself. So when a post comes in and you decode the callback URL into an event name, you tell Conduit about it. Effectively you’re saying, “Hey I just received some data and it has this event name. Give this data to anyone that is interested in user1234-contacts-faves.”
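The emitter idea is small enough to sketch in full. This is a Python approximation of the pattern, not node.js’s own emitter or conduit’s code: listeners register for an event name, and emitting that name hands the data to each of them.

```python
from collections import defaultdict


class Emitter:
    """Tiny pub/sub structure: listeners register by event name."""

    def __init__(self):
        self._listeners = defaultdict(list)

    def on(self, event, listener):
        self._listeners[event].append(listener)

    def emit(self, event, data):
        # Hand the data to every listener registered for this event name.
        listeners = self._listeners.get(event, [])
        for listener in listeners:
            listener(data)
        return len(listeners) > 0  # True if anyone was listening


emitter = Emitter()
received = []
emitter.on("user1234-contacts-faves", received.append)

handled = emitter.emit("user1234-contacts-faves", {"photo_id": 1001})
unhandled = emitter.emit("user9999-uploads", {"photo_id": 1002})
print(received, handled, unhandled)
```

The code that receives the post and the code that uses the photo never reference each other; the event name is the only thing they share.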

Finally, fun photo data.

Your application code registers with this emitter which events it’s interested in, and when they come in, it does something fun with them. Now I’m going to explain a bit about the mini-app that I bundled with the conduit server to show how this works.
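
The emit-and-listen pattern described above can be sketched with node.js’s built-in `EventEmitter`. This uses a plain emitter rather than Conduit’s own object, and the photo payload is invented for illustration.

```javascript
const { EventEmitter } = require('events');

const emitter = new EventEmitter();
const received = [];

// Application code: register interest in one event name.
emitter.on('user1234-contacts-faves', (photo) => {
  received.push(photo.title);
});

// Server code: a post arrived and was decoded to this event name.
emitter.emit('user1234-contacts-faves', { title: 'Hypothetical photo' });

// An event nobody registered for is simply dropped; emit() returns false.
const hadListeners = emitter.emit('user5678-contacts-faves', { title: 'Ignored' });

console.log(received, hadListeners);
```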

Real-time stream

Flickr + conduit + node.js + socket.io. I wanted the final product to be a simple webpage where, whenever my subscriptions were posted to, the photos would simply show up on the page. I glued a few fun tools together and got something really interesting.

PHP is my engine.

Handles the PuSH subscribing and printing out my JS. This entire sample app could easily have been written in JS, but PHP is what I do all day at work, my JavaScript is a little rusty, and I wanted to get something up and running quickly.

I was able to grab former Flickr engineer, now Etsy CTO, Kellan Elliott-McCrea’s flickr.simple.php library to handle authenticating the user as well as posting subscription requests.

So the PHP app I built first authenticates the user with Flickr and then presents the list of real-time topics he or she can subscribe to. The user checks the topics they’re interested in and then gets redirected to a screen where the images will show up as they come in. I create the unique callback URLs and at the same time dump these event names into the JavaScript on the page so that my socket.io code can tell my node server what events it’d like to receive when they come in.

Meanwhile, back on the server…

As mentioned earlier, Flickr will post the photo data to my callback URLs on the conduit server. This callback URL directly becomes the event name, mapping exactly to what the browser told socket.io that it was interested in. When this event comes in, conduit hands it to the emitter, which then broadcasts the event back out. The server end of the socket then receives this and pumps it down into the browser’s open arms.
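
A rough sketch of that last hop, from emitter to socket. To keep it runnable without installing anything, it uses a fake socket object in place of a real socket.io connection; `relayToSocket` is a hypothetical helper, not part of Conduit or socket.io.

```javascript
const { EventEmitter } = require('events');

// Forward every event the browser asked for from the shared emitter to its socket.
// "socket" is anything with an emit(name, data) method; a socket.io socket has one.
function relayToSocket(emitter, socket, eventNames) {
  const handlers = eventNames.map((name) => {
    const handler = (data) => socket.emit(name, data);
    emitter.on(name, handler);
    return [name, handler];
  });
  // Call the returned function on disconnect so listeners do not pile up.
  return () => handlers.forEach(([name, handler]) => emitter.removeListener(name, handler));
}

// Usage with a fake socket that records what it was sent.
const conduitEmitter = new EventEmitter();
const sent = [];
const fakeSocket = { emit: (name, data) => sent.push({ name, data }) };

const stop = relayToSocket(conduitEmitter, fakeSocket, ['user1234-contacts-faves']);
conduitEmitter.emit('user1234-contacts-faves', { id: 'photo-1' });
stop();
conduitEmitter.emit('user1234-contacts-faves', { id: 'photo-2' }); // no longer relayed

console.log(sent.length);
```

Returning a cleanup function is a design choice for this sketch: with many browsers connecting and disconnecting, listeners left on a shared emitter would otherwise accumulate.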

And back to the browser.

The browser then receives the photo data and inserts it into the page. That was a whole lot of engineering just to get an image up on the screen and we know it, but with flickr-conduit, you can skip most of the details and just write fun code. This actually turns out to be a great way to explore Flickr.

On my extra monitor at work, I’ve subscribed to my contacts’ faves and all the updates from our Commons area (which includes museums and various archives) to get a weird blend of 100-year-old pictures and things my friends find interesting.

So, in summary, this is the why and the how of Flickr adding a real-time push feed on the cheap. Since we know the spec is dense and there are several moving parts, we also hope flickr-conduit helps out new developers, who will hopefully learn to love our new real-time feeds as much as they love our existing pull feeds.

Thanks.

Related links:

conduit: https://github.com/mncaudill/flickr-conduit

tom’s conduit links: https://github.com/RandomEtc/flickr-conduit-front, https://github.com/RandomEtc/flickr-conduit-back

pubsubhubbub spec: http://pubsubhubbub.googlecode.com/svn/trunk/pubsubhubbub-core-0.3.html

pua: http://pua.spum.org (http://www.aaronland.info/weblog/2011/05/07/fancy/)

nolan’s conduit: http://nolancaudill.com/projects/conduit/

flickr globe: http://nolancaudill.com/~pdokas/flobe/

kellan’s post: http://laughingmeme.org/2011/07/24/getting-started-with-flickr-real-time-apis-in-php/

neil’s flickr post: http://code.flickr.com/blog/2011/06/30/dont-be-so-pushy/

This is a guest post by Teruhisa Haruguchi: Frontend Engineer in the Mobile & Presentation Service group. Main developer on the Flickr Photo Session project. Recent graduate from Cornell University with a concentration in Computer Graphics. Home country Japan.
